Prerequisites:

A prior understanding of containerization with Docker, i.e. creating containers from a Dockerfile.

It is advised that you have a good understanding of how Docker images work.

Ensure that a version of Docker Desktop suitable for your computer is installed.

Introduction:

Generally, everyone loves easier ways of doing things: blenders instead of manual grinding, cars instead of walking, CI/CD pipelines instead of manual integrations and deployments. Containerization of applications is no exception. Imagine you have a large enterprise application that has been split into different Docker containers (a container is an instance of a Docker image) to make it scalable, say payment, analytics, sales, etc. The app also has different dependencies: a database, an admin manager, and so on. To run the application as a whole manually, you'd probably have to start each part with the `docker run IMAGE_NAME` command in your terminal and specify the network ports, volumes and so on for each container so that they work together. That would be exhausting, right? Yeah. Now the interesting part: Docker Compose makes the stress of running these commands individually go away. In this tutorial, using a simple use case, you'll learn how this tool makes your development and containerization process faster.

Containerization

Consider a scenario where you've built your amazing backend solution and tested it in your local environment. You're ready to deploy, only for your teammate to test the app and find out that it doesn't work on their computer. That becomes a dilemma for your development process. This is the problem containerization solves: it is the process of packaging your application or service into a platform-agnostic container so that it runs the same way in any development or production environment that supports containerization.

What is Docker?

Docker is a containerization tool commonly used to package your application in an isolated environment.

What is a Docker Image?

A Docker image is a template or blueprint of the contents and dependencies used in a Docker container. For instance, the PostgreSQL image is used as a blueprint to build a PostgreSQL database container.

What are Containers?

Docker containers are isolated environments in which the different parts of your app, its services, and its dependencies are packaged, and which can be connected to the app's other services. A Docker container is an instance of a Docker image.

What is Docker Compose?

Docker Compose is a tool, driven by a YAML file, for defining all the containers your application needs and running them together, which makes development faster. For instance, if your app needs a database, it simply uses the one declared in the docker-compose file. All of this is done with a single command, `docker compose up`. The YAML file contains all the details about the different containers that you want to run.

Common Keywords used in Docker Compose files

The main keyword in a docker-compose file is `services`. Under `services`, the different containers are outlined: for example, a database container, a pgAdmin container, or even an application service (in a microservices architecture). A service can be named however you see fit, but it's good practice to name it after its use case. For example, a database service can simply be named `database`.

Under each service section, different parameters are outlined. Some of them include:

- `container_name`: The name of the container you're creating. By default, the container name is derived from the project and service names, but it can be overridden with the `container_name` parameter.
- `image`: The Docker image used as the blueprint for the container. The image is often pulled from Docker Hub or from a private registry such as Amazon ECR. It can also be an image you built locally from a Dockerfile.
- `environment`: The environment variables for the container, such as database usernames and passwords, depending on the service used.
- `ports`: The ports published by the container, written as `HOST_PORT:CONTAINER_PORT`. Note that no two containers can publish the same port on your local machine. For example, `8081:8081` maps port `8081` on your local machine to port `8081` in the container.

The code snippet below is a simple set of docker-compose instructions for a Postgres database container.

    services:
      database:
        container_name: postgres
        image: postgres
        environment:
          POSTGRES_DB: YOUR_POSTGRES_DATABASE_NAME
          POSTGRES_PASSWORD: YOUR_POSTGRES_DB_PASSWORD
          POSTGRES_USER: YOUR_POSTGRES_DB_USERNAME
          # The official postgres image reads PGDATA (no underscore) for its data directory
          PGDATA: /data/postgres
        ports:
          - "5432:5432"
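To make the `ports` rule more concrete, here is a minimal sketch of two Postgres containers running side by side. The service names `database-one` and `database-two` and the password value `example` are placeholders invented for this illustration. Both containers listen on port 5432 internally, so each one has to be published on a different port of your local machine.

```yaml
services:
  database-one:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example   # placeholder value, replace with your own
    ports:
      - "5432:5432"   # local machine port 5432 -> container port 5432

  database-two:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example   # placeholder value, replace with your own
    ports:
      - "5433:5432"   # a different local port; the container port can repeat
```

If both services used `"5432:5432"`, the second container would fail to start because port 5432 on your local machine would already be taken.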

Example:

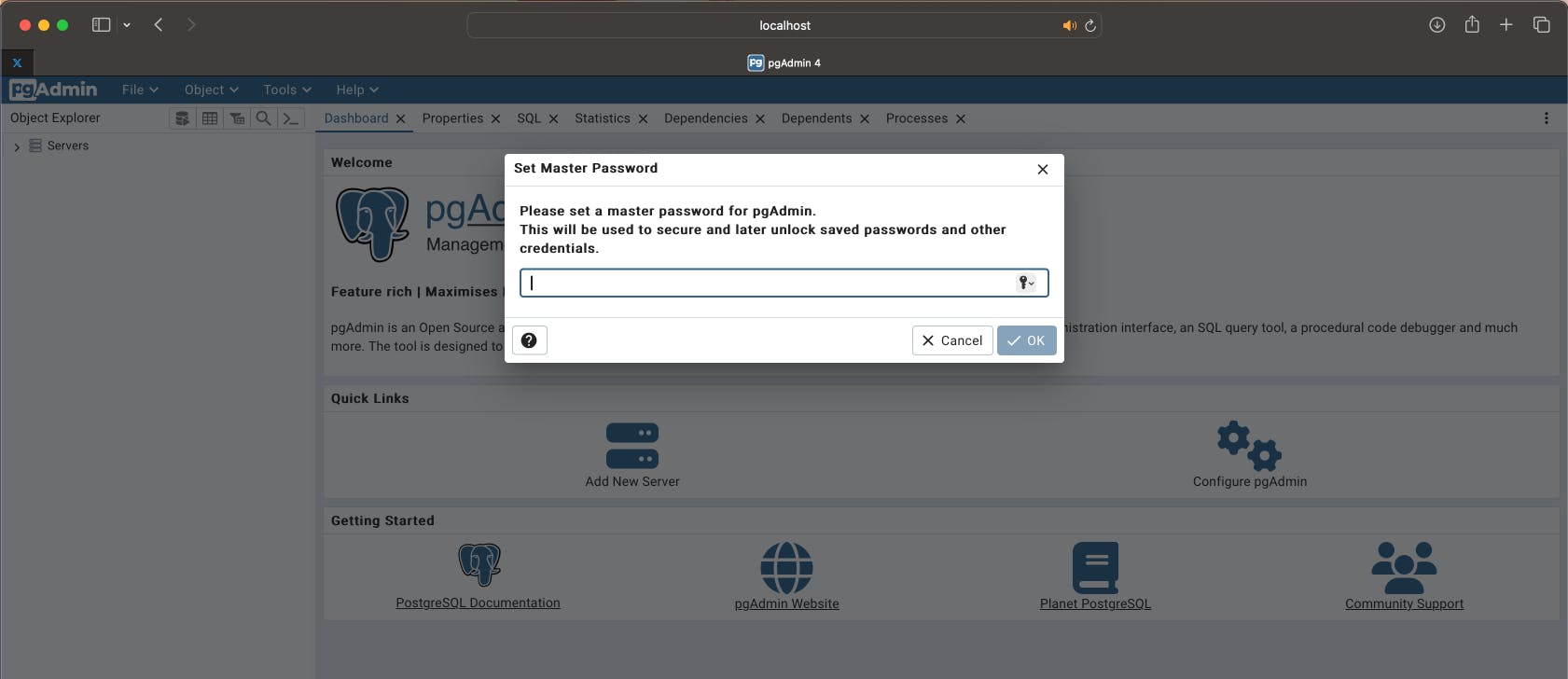

In this example, we're going to create a simple application that runs a database management tool(pgadmin). The app runs pgadmin as a container in docker-compose. At the end of the process, you can run the pgadmin on a specific port you pick. You'll have something like this:

Step 1: Create your backend project and enable the docker-compose dependency for the kind of project you're working with. If you're running a Spring Boot project, enable the dependency as shown below:

- Maven build: In the dependencies tag, add the following to enable docker-compose:

      <dependency>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-docker-compose</artifactId>
          <scope>runtime</scope>
          <optional>true</optional>
      </dependency>

- Gradle build: In the dependencies block, add the following:

      dependencies {
          implementation("org.springframework.boot:spring-boot-docker-compose")
      }

Rebuild your project for the dependency change to take effect.

Step 2: Create a docker compose file.

In the root folder of your project, create a file named `docker-compose.yml`. This is the file that will define your Docker containers.
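If you added the Spring Boot dependency in Step 1, the application can also locate and start this file itself when it boots. By default it looks for the compose file in the directory the app is started from, so the fragment below is optional. It is only a sketch, and it assumes your project is configured through an `application.yml` rather than `application.properties`.

```yaml
# src/main/resources/application.yml (assumed location and format)
spring:
  docker:
    compose:
      # Path to the compose file, relative to the directory the app is started from
      file: ./docker-compose.yml
      # Bring the containers up when the app starts and stop them when it shuts down
      lifecycle-management: start-and-stop
```

You can still run the file by hand from the terminal; this setting only controls what Spring Boot does on startup and shutdown.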

Step 3: Write your compose commands.

In your docker-compose file, add the following set of instructions:

    services:
      pgadmin:
        container_name: pgadmin
        image: dpage/pgadmin4
        environment:
          PGADMIN_DEFAULT_EMAIL: ${PGADMIN_DEFAULT_EMAIL:-pgadmin@pgadmin.org}
          PGADMIN_DEFAULT_PASSWORD: ${PGADMIN_DEFAULT_PASSWORD:-admin}
          PGADMIN_CONFIG_SERVER_MODE: 'False'
        ports:
          - "5051:80"
        restart: unless-stopped

Most of the instructions above should be familiar to you by now, as they've been explained earlier. The image is specific to pgAdmin and can be found on Docker Hub. By default, Docker assumes that an image name without a registry prefix refers to Docker Hub (docker.io), which is why the image reference is not always written in full. The `${VAR:-default}` syntax uses the value of the environment variable if it is set and falls back to the default after `:-` otherwise. The `ports` entry indicates that port 5051 on the local machine is mapped to port 80 in the pgAdmin container, and `restart: unless-stopped` tells Docker to restart the container automatically unless you stop it yourself.
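To illustrate how the short image name is resolved, the sketch below shows the same service with the image written in its short form, next to the fully qualified reference it expands to. The private-registry line is purely hypothetical: the account ID, region, and repository name are made up to show the shape of such a reference.

```yaml
services:
  pgadmin:
    # Short form: no registry host and no tag
    image: dpage/pgadmin4
    # Equivalent fully qualified form (Docker Hub registry, default "latest" tag):
    # image: docker.io/dpage/pgadmin4:latest
    # An image in a private registry needs the registry host spelled out
    # (hypothetical Amazon ECR account ID, region, and repository):
    # image: 123456789012.dkr.ecr.us-east-1.amazonaws.com/my-team/pgadmin4:1.0
```

Pinning an explicit tag instead of relying on `latest` is usually the safer choice, since it keeps everyone on the team running the same image version.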

Step 4: Run docker compose.

Before running Docker Compose, make sure Docker Desktop is open and you're signed in. Next, go to your terminal, make sure you're in the project's root folder, and run the following command:

docker compose up

Running the above command will get all the containers declared in the docker-compose file running concurrently.

Check Docker Desktop to verify that the container is running.

The image above shows that the pgadmin container is running as expected.

Step 5: Test your container.

Open the following URL in your browser: http://localhost:5051. If the pgAdmin site opens, your container is running successfully.

Beyond running single containers and services, Docker Compose is most useful for running multi-container applications, such as applications with a microservices architecture, where each part or major feature of the application forms an independent unit or service. By keeping all these containers in one place, Docker Compose helps them form a cohesive unit and work together, irrespective of the development environment.
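As a rough sketch of what that looks like, the file below combines the Postgres service and the pgAdmin service from this tutorial into one docker-compose file. The `depends_on` entry is an addition for illustration and was not part of the earlier snippets. The uppercase `YOUR_...` values are placeholders to replace with your own.

```yaml
services:
  database:
    container_name: postgres
    image: postgres
    environment:
      POSTGRES_DB: YOUR_POSTGRES_DATABASE_NAME
      POSTGRES_PASSWORD: YOUR_POSTGRES_DB_PASSWORD
      POSTGRES_USER: YOUR_POSTGRES_DB_USERNAME
    ports:
      - "5432:5432"

  pgadmin:
    container_name: pgadmin
    image: dpage/pgadmin4
    environment:
      PGADMIN_DEFAULT_EMAIL: ${PGADMIN_DEFAULT_EMAIL:-pgadmin@pgadmin.org}
      PGADMIN_DEFAULT_PASSWORD: ${PGADMIN_DEFAULT_PASSWORD:-admin}
      PGADMIN_CONFIG_SERVER_MODE: 'False'
    ports:
      - "5051:80"
    # Start the database container before pgadmin. This controls start order only;
    # it does not wait for Postgres to be ready to accept connections.
    depends_on:
      - database
    restart: unless-stopped
```

With this file, a single `docker compose up` starts both containers. Compose places them on a shared default network where each service is reachable by its service name, so when you register the server in pgAdmin you can use `database` as the host name and `5432` as the port.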

Conclusion

In this tutorial, you have seen a simple illustration of how Docker Compose works, albeit with a single service. The major thing to note is that development becomes faster and easier with Docker Compose, especially when working on applications with multiple containers. It removes the stress of writing and running a separate command for each container. The more containers you work with, the more you'll come to appreciate the power of Docker Compose. You can find the link to the GitHub repository here. I hope this guide has proven helpful; kindly like it or give a clap. Cheers!